UPDATE: I’ve posted a very classy email response from Friston here.

In a “comments and controversies” piece published in NeuroImage last week, Karl Friston describes “Ten ironic rules for non-statistical reviewers”. As the title suggests, the piece is presented ironically; Friston frames it as a series of guidelines reviewers can follow in order to ensure successful rejection of any neuroimaging paper. But of course, Friston’s real goal is to convince you that the practices described in the commentary are bad ones, and that reviewers should stop picking on papers for such things as having too little power, not cross-validating results, and not being important enough to warrant publication.

Friston’s piece is, simultaneously, an entertaining satire of some lamentable reviewer practices, and—in my view, at least—a frustratingly misplaced commentary on the relationship between sample size, effect size, and inference in neuroimaging. While it’s easy to laugh at some of the examples Friston gives, many of the positions Friston presents and then skewers aren’t just humorous portrayals of common criticisms; they’re simply bad caricatures of comments that I suspect only a small fraction of reviewers ever make. Moreover, the cures Friston proposes—most notably, the recommendation that sample sizes on the order of 16 to 32 are just fine for neuroimaging studies—are, I’ll argue, much worse than the diseases he diagnoses.

Before taking up the objectionable parts of Friston’s commentary, I’ll just touch on the parts I don’t think are particularly problematic. Of the ten rules Friston discusses, seven seem palatable, if not always helpful:

- Rule 6 seems reasonable; there does seem to be excessive concern about the violation of assumptions of standard parametric tests. It’s not that this type of thing isn’t worth worrying about at some point, just that there are usually much more egregious things to worry about, and it’s been demonstrated that the most common parametric tests are (relatively) insensitive to violations of normality under realistic conditions.

- Rule 10 is also on point; given that we know the reliability of peer review is very low, it’s problematic when reviewers make the subjective assertion that a paper just isn’t important enough to be published in such-and-such journal, even as they accept that it’s technically sound. Subjective judgments about importance and innovation should be left to the community to decide. That’s the philosophy espoused by open-access venues like PLoS ONE and Frontiers, and I think it’s a good one.

- Rules 7 and 9 (criticizing a lack of validation or a failure to run certain procedures) aren’t wrong, but seem to me much too broad to support blanket pronouncements. Surely much of the time when reviewers highlight missing procedures, or complain about a lack of validation, there are perfectly good reasons for doing so. I don’t imagine Friston is really suggesting that reviewers should stop asking authors for more information or for additional controls when they think it’s appropriate, so it’s not clear what the point of including this here is. The example Friston gives in Rule 9 (of requesting retinotopic mapping in an olfactory study), while humorous, is so absurd as to be worthless as an indictment of actual reviewer practices. In fact, I suspect it’s so absurd precisely because anything less extreme Friston could have come up with would have caused readers to think, “but wait, that could actually be a reasonable concern…”

- Rules 1, 2, and 3 seem reasonable as far as they go; it’s just common sense to avoid overconfidence, arguments from emotion, and tardiness. Still, I’m not sure what’s really accomplished by pointing this out; I doubt there are very many reviewers who will read Friston’s commentary and say “you know what, I’m an overconfident, emotional jerk, and I’m always late with my reviews; I never realized this before.” I suspect the people who fit that description (and for all I know, I may be one of them) will be nodding and chuckling along with everyone else.

This leaves Rules 4, 5, and 8, which, conveniently, all focus on a set of interrelated issues surrounding low power, effect size estimation, and sample size. Because Friston’s treatment of these issues strikes me as dangerously wrong, and liable to send a very bad message to the neuroimaging community, I’ve laid out some of these issues in considerably more detail than you might be interested in. If you just want the direct rebuttal, skip to the “Reprising the rules” section below; otherwise the next two sections sketch Friston’s argument for using small sample sizes in fMRI studies, and then describe some of the things wrong with it.

Friston’s argument

Friston’s argument is based on three central claims:

- Classical inference (i.e., the null hypothesis testing framework) suffers from a critical flaw, which is that the null is always false: no effects (at least in psychology) are ever truly zero. Collect enough data and you will always end up rejecting the null hypothesis with probability of 1.

- Researchers care more about large effects than about small ones. In particular, there is some size of effect that any given researcher will call ‘trivial’, below which that researcher is uninterested in the effect.

- If the null hypothesis is always false, and if some effects are not worth caring about in practical terms, then researchers who collect very large samples will invariably end up identifying many effects that are statistically significant but completely uninteresting.

I think it would be hard to dispute any of these claims. The first one is the source of persistent statistical criticism of the null hypothesis testing framework, and the second one is self-evidently true (if you doubt it, ask yourself whether you would really care to continue your research if you knew with 100% confidence that all of your effects would never be any larger than one one-thousandth of a standard deviation). The third one follows directly from the first two.

Where Friston’s commentary starts to depart from conventional wisdom is in the implications he thinks these premises have for the sample sizes researchers should use in neuroimaging studies. Specifically, he argues that since large samples will invariably end up identifying trivial effects, whereas small samples will generally only have power to detect large effects, it’s actually in neuroimaging researchers’ best interest not to collect a lot of data. In other words, Friston turns what most commentators have long considered a weakness of fMRI studies—their small sample size—into a virtue.

Here’s how he characterizes an imaginary reviewer’s misguided concern about low power:

Reviewer: Unfortunately, this paper cannot be accepted due to the small number of subjects. The significant results reported by the authors are unsafe because the small sample size renders their design insufficiently powered. It may be appropriate to reconsider this work if the authors recruit more subjects.

Friston suggests that the appropriate response from a clever author would be something like the following:

Response: We would like to thank the reviewer for his or her comments on sample size; however, his or her conclusions are statistically misplaced. This is because a significant result (properly controlled for false positives), based on a small sample indicates the treatment effect is actually larger than the equivalent result with a large sample. In short, not only is our result statistically valid. It is quantitatively more significant than the same result with a larger number of subjects.

This is supported by an extensive appendix (written non-ironically), where Friston presents a series of nice sensitivity and classification analyses intended to give the reader an intuitive sense of what different standardized effect sizes mean, and what the implications are for the detection of statistically significant effects using a classical inference (i.e., hypothesis testing) approach. The centerpiece of the appendix is a loss-function analysis where Friston pits the benefit of successfully detecting a large effect (which he defines as a Cohen’s d of 1, i.e., an effect of one standard deviation) against the cost of rejecting the null when the effect is actually trivial (defined as a d of 0.125 or less). Friston notes that the loss function is minimized (i.e., the difference between the detection rate for large effects and the detection rate for trivial effects is maximized) when n = 16, which is where the number he repeatedly quotes as a reasonable sample size for fMRI studies comes from. (Actually, as I discuss in my Appendix I below, I think Friston’s power calculations are off, and the right number, even given his assumptions, is more like 22. But the point is, it’s a small number either way.)

It’s important to note that Friston is not shy about asserting his conclusion that small samples are just fine for neuroimaging studies—especially in the Appendices, which are not intended to be ironic. He makes claims like the following:

The first appendix presents an analysis of effect size in classical inference that suggests the optimum sample size for a study is between 16 and 32 subjects. Crucially, this analysis suggests significant results from small samples should be taken more seriously than the equivalent results in oversized studies.

And:

In short, if we wanted to optimise the sensitivity to large effects but not expose ourselves to trivial effects, sixteen subjects would be the optimum number.

And:

In short, if you cannot demonstrate a significant effect with sixteen subjects, it is probably not worth demonstrating.

These are very strong claims delivered with minimal qualification, and given Friston’s influence, could potentially lead many reviewers to discount their own prior concerns about small sample size and low power—which would be disastrous for the field. So I think it’s important to explain exactly why Friston is wrong and why his recommendations regarding sample size shouldn’t be taken seriously.

What’s wrong with the argument

Broadly speaking, there are three problems with Friston’s argument. The first one is that Friston presents the absolute best-case scenario as if it were typical. Specifically, the recommendation that a sample of 16 – 32 subjects is generally adequate for fMRI studies assumes that fMRI researchers are conducting single-sample t-tests at an uncorrected threshold of p < .05; that they only care about effects on the order of 1 sd in size; and that any effect smaller than d = .125 is trivially small and is to be avoided. If all of this were true, an n of 16 (or rather, 22; see Appendix I below) might be reasonable. But it doesn’t really matter, because if you make even slightly less optimistic assumptions, you end up in a very different place. For example, for a two-sample t-test at p < .001 (a very common scenario in group difference studies), the optimal sample size, according to Friston’s own loss-function analysis, turns out to be 87 per group, or 174 subjects in total.

I discuss the problems with the loss-function analysis in much more detail in Appendix I below; the main point here is that even if you take Friston’s argument at face value, his own numbers put the lie to the notion that a sample size of 16 – 32 is sufficient for the majority of cases. It flatly isn’t. There’s nothing magic about 16, and it’s very bad advice to suggest that authors should routinely shoot for sample sizes this small when conducting their studies given that Friston’s own analysis would seem to demand a much larger sample size the vast majority of the time.
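To make the dependence on assumptions concrete, here is a minimal sketch of the loss-function logic: find the n that maximizes the chance of detecting a large effect (d = 1) minus the chance of flagging a trivial one (d = 0.125). It is not Friston’s calculation or mine from Appendix I; it assumes a one-sided test and a normal approximation to the t-test, so the numbers it prints will differ somewhat from exact t-based figures. The function names are made up for illustration.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal, used for the approximation


def approx_power(d, n, alpha, two_sample=False):
    """Approximate power of a one-sided test for a standardized effect d.

    Assumption: normal approximation to the t-test. n is the number of
    subjects per group.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)
    # Expected test statistic: d*sqrt(n) for one sample, d*sqrt(n/2) for two.
    expected_z = d * math.sqrt(n / 2 if two_sample else n)
    return STD_NORMAL.cdf(expected_z - z_crit)


def loss_optimal_n(alpha, two_sample=False, large=1.0, trivial=0.125, max_n=500):
    """n (per group) maximizing power for a large effect minus power for a trivial one."""
    def gain(n):
        return (approx_power(large, n, alpha, two_sample)
                - approx_power(trivial, n, alpha, two_sample))
    return max(range(2, max_n + 1), key=gain)


if __name__ == "__main__":
    print("one-sample, p < .05 :", loss_optimal_n(0.05))
    print("two-sample, p < .001:", loss_optimal_n(0.001, two_sample=True), "per group")
```

Even under this crude approximation, moving from a one-sample test at p < .05 to a two-sample test at p < .001 pushes the “optimal” n per group well past the 16 – 32 range.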

What about uncertainty?

The second problem is that Friston’s argument entirely ignores the role of uncertainty in drawing inferences about effect sizes. The notion that an effect that comes from a small study is likely to be bigger than one that comes from a larger study may be strictly true in the sense that, for any fixed p value, the observed effect size necessarily varies inversely with sample size. It’s true, but it’s also not very helpful. The reason it’s not helpful is that while the point estimate of statistically significant effects obtained from a small study will tend to be larger, the uncertainty around that estimate is also greater—and with sample sizes in the neighborhood of 16 – 20, will typically be so large as to be nearly worthless. For example, a correlation of r = .75 sounds huge, right? But when that correlation is detected at a threshold of p < .001 in a sample of 16 subjects, the corresponding 99.9% confidence interval is .06 – .95—a range so wide as to be almost completely uninformative.
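The interval quoted above is easy to check. The sketch below uses the standard Fisher z-transform for a Pearson correlation; the function name is just for illustration, and the normal approximation behind the transform is itself an assumption, if a conventional one.

```python
import math
from statistics import NormalDist


def correlation_ci(r, n, confidence):
    """Confidence interval for a Pearson correlation via the Fisher z-transform."""
    z = math.atanh(r)                      # transform r to the z scale
    se = 1 / math.sqrt(n - 3)              # standard error on the z scale
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


low, high = correlation_ci(r=0.75, n=16, confidence=0.999)
print(f"99.9% CI: {low:.2f} to {high:.2f}")
```

Rerunning it with a larger n (say 100) shows how quickly the interval tightens around the same point estimate.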

Fortunately, what Friston argues small samples can do for us indirectly—namely, establish that effect sizes are big enough to care about—can be done much more directly, simply by looking at the uncertainty associated with our estimates. That’s exactly what confidence intervals are for. If our goal is to ensure that we only end up talking about results big enough to care about, it’s surely better to answer the question “how big is the effect?” by saying, “d = 1.1, with a 95% confidence interval of 0.2 – 2.1” than by saying “well it’s statistically significant at p < .001 in a sample of 16 subjects, so it’s probably pretty big”. In fact, if you take the latter approach, you’ll be wrong quite often, for the simple reason that p values will generally be closer to the statistical threshold with small samples than with big ones. Remember that, by definition, the point at which one is allowed to reject the null hypothesis is also the point at which the relevant confidence interval borders on zero. So it doesn’t really matter whether your sample is small or large; if you only just barely managed to reject the null hypothesis, you cannot possibly be in a good position to conclude that the effect is likely to be a big one.

As far as I can tell, Friston completely ignores the role of uncertainty in his commentary. For example, he gives the following example, which is supposed to convince you that you don’t really need large samples:

Imagine we compared the intelligence quotient (IQ) between the pupils of two schools. When comparing two groups of 800 pupils, we found mean IQs of 107.1 and 108.2, with a difference of 1.1. Given that the standard deviation of IQ is 15, this would be a trivial effect size … In short, although the differential IQ may be extremely significant, it is scientifically uninteresting … Now imagine that your research assistant had the bright idea of comparing the IQ of students who had and had not recently changed schools. On selecting 16 students who had changed schools within the past five years and 16 matched pupils who had not, she found an IQ difference of 11.6, where this medium effect size just reached significance. This example highlights the difference between an uninformed overpowered hypothesis test that gives very significant, but uninformative results and a more mechanistically grounded hypothesis that can only be significant with a meaningful effect size.

But the example highlights no such thing. One is not entitled to conclude, in the latter case, that the true effect must be medium-sized just because it came from a small sample. If the effect only just reached significance, the confidence interval by definition just barely excludes zero, and we can’t say anything meaningful about the size of the effect, but only about its sign (i.e., that it was in the expected direction)—which is (in most cases) not nearly as useful.
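To put rough numbers on this, here is a back-of-the-envelope interval for the school-change example. The group size and mean difference come from the quoted passage; everything else is an assumption made for illustration: that each group’s standard deviation equals the population value of 15, and that a pooled two-sample t-test with 30 degrees of freedom applies.

```python
import math

# Values taken from the quoted example.
n_per_group = 16
mean_diff = 11.6

# Assumptions for illustration only: both groups have SD = 15, and a
# pooled-variance two-sample t-test is used.
sd = 15.0
t_crit = 2.042  # two-sided 5% critical value of t with 30 df (standard table value)

se_diff = sd * math.sqrt(2 / n_per_group)   # standard error of the difference
ci_low = mean_diff - t_crit * se_diff
ci_high = mean_diff + t_crit * se_diff

print(f"t = {mean_diff / se_diff:.2f} on {2 * n_per_group - 2} df")
print(f"95% CI for the IQ difference: {ci_low:.1f} to {ci_high:.1f} points")
print(f"95% CI in SD units (d): {ci_low / sd:.2f} to {ci_high / sd:.2f}")
```

Under these assumptions the interval runs from less than one IQ point to more than twenty, which is compatible with anything from a trivial effect to an enormous one.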

In fact, we will generally be in a much worse position with a small sample than a large one, because with a large sample, we at least stand a chance of being able to distinguish small effects from large ones. Recall that Friston advises against collecting very large samples for the very reason that they are likely to produce a wealth of statistically-significant-but-trivially-small effects. Well, maybe so, but so what? Why would it be a bad thing to detect trivial effects so long as we were also in an excellent position to know that those effects were trivial? Nothing about the hypothesis-testing framework commits us to treating all of our statistically significant results like they’re equally important. If we have a very large sample, and some of our effects have confidence intervals from 0.02 to 0.15 while others have CIs from 0.42 to 0.52, we would be wise to focus most of our attention on the latter rather than the former. At the very least this seems like a more reasonable approach than deliberately collecting samples so small that they will rarely be able to tell us anything meaningful about the size of our effects.

What about the prior?

The third, and arguably biggest, problem with Friston’s argument is that it completely ignores the prior, i.e., the expected distribution of effect sizes across the brain. Friston’s commentary assumes a uniform prior everywhere; for the analysis to go through, one has to believe that trivial effects and very large effects are equally likely to occur. But this is patently absurd; while that might be true in select situations, by and large, we should expect small effects to be much more common than large ones. In a previous commentary (on the Vul et al “voodoo correlations” paper), I discussed several reasons for this; rather than go into detail here, I’ll just summarize them:

- It’s frankly just not plausible to suppose that effects are really as big as they would have to be in order to support adequately powered analyses with small samples. For example, a correlational analysis with 20 subjects at p < .001 would require a population effect size of r = .77 to have 80% power. If you think it’s plausible that focal activation in a single brain region can explain 60% of the variance in a complex trait like fluid intelligence or extraversion, I have some property under a bridge I’d like you to come by and look at.

- The low-hanging fruit get picked off first. Back when fMRI was in its infancy in the mid-1990s, people could indeed publish findings based on samples of 4 or 5 subjects. I’m not knocking those studies; they taught us a huge amount about brain function. In fact, it’s precisely because they taught us so much about the brain that researchers can no longer stick 5 people in a scanner and report that doing a working memory task robustly activates the frontal cortex. Nowadays, identifying an interesting effect is more difficult—and if that effect were really enormous, odds are someone would have found it years ago. But this shouldn’t surprise us; neuroimaging is now a relatively mature discipline, and effects on the order of 1 sd or more are extremely rare in most mature fields (for a nice review, see Meyer et al (2001)).

- fMRI studies with very large samples invariably seem to report much smaller effects than fMRI studies with small samples. This can only mean one of two things: (a) large studies are done much more poorly than small studies (implausible—if anything, the opposite should be true); or (b) the true effects are actually quite small in both small and large fMRI studies, but they’re inflated by selection bias in small studies, whereas large studies give an accurate estimate of their magnitude (very plausible).

- Individual differences or between-group analyses, which have much less power than within-subject analyses, tend to report much more sparing activations. Again, this is consistent with the true population effects being on the small side.
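The required effect size in the first point above can be approximated in a few lines. This is a sketch using the Fisher z approximation for a two-sided test, not the exact routine a package like pwr uses, so it can differ from the quoted value in the second decimal place; the function name is made up.

```python
import math
from statistics import NormalDist


def required_r(n, alpha, power):
    """Approximate population correlation needed to reach the given power.

    Two-sided test at level alpha, using the Fisher z approximation.
    """
    normal = NormalDist()
    z_needed = normal.inv_cdf(1 - alpha / 2) + normal.inv_cdf(power)
    return math.tanh(z_needed / math.sqrt(n - 3))


r = required_r(n=20, alpha=0.001, power=0.80)
print(f"required r = {r:.2f}, variance explained = {r * r:.0%}")
```

Plugging in larger samples shows how fast the bar drops: the same threshold and power with a few hundred subjects requires only a modest correlation.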

To be clear, I’m not saying there are never any large effects in fMRI studies. Under the right circumstances, there certainly will be. What I’m saying is that, in the absence of very good reasons to suppose that a particular experimental manipulation is going to produce a large effect, our default assumption should be that the vast majority of (interesting) experimental contrasts are going to produce diffuse and relatively weak effects.

Note that Friston’s assertion that “if one finds a significant effect with a small sample size, it is likely to have been caused by a large effect size” depends entirely on the prior effect size distribution. If the brain maps we look at are actually dominated by truly small effects, then it’s simply not true that a statistically significant effect obtained from a small sample is likely to have been caused by a large effect size. We can see this easily by thinking of a situation in which an experiment has a weak but very diffuse effect on brain activity. Suppose that the entire brain showed ‘trivial’ effects of d = 0.125 in the population, and that there were actually no large effects at all. A one-sample t-test at p < .001 has less than 1% power to detect this effect, so you might suppose, as Friston does, that we could discount the possibility that a significant effect would have come from a trivial effect size. And yet, because a whole-brain analysis typically involves tens of thousands of tests, there’s a very good chance such an analysis will end up identifying statistically significant effects somewhere in the brain. Unfortunately, because the only way to identify a trivial effect with a small sample is to capitalize on chance (Friston discusses this point in his Appendix II, and additional treatments can be found in Ioannidis (2008), or in my 2009 commentary), that tiny effect won’t look tiny when we examine it; it will in all likelihood look enormous.

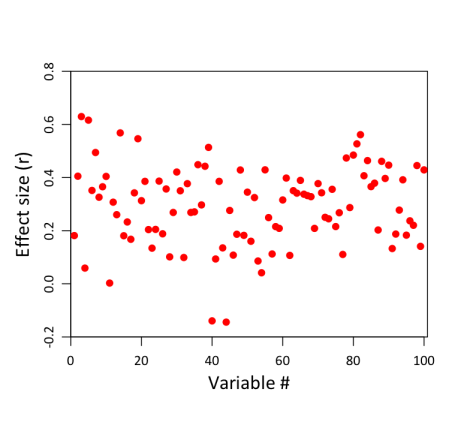

Since they say a picture is worth a thousand words, here’s one (from an unpublished paper in progress):

The top panel shows you a hypothetical distribution of effects (Pearson’s r) in a 2-dimensional ‘brain’ in the population. Note that there aren’t any astronomically strong effects (though the white circles indicate correlations of .5 or greater, which are certainly very large). The bottom panel shows what happens when you draw random samples of various sizes from the population and use different correction thresholds/approaches. You can see that the conclusion you’d draw if you followed Friston’s advice (i.e., that any effect you observe with n = 20 must be pretty robust to survive correction) is wrong; the isolated region that survives correction at FDR = .05, while ‘real’ in a trivial sense, is not in fact very strong in the true map. It just happens to be grossly inflated by sampling error. This is to be expected; when power is very low but the number of tests you’re performing is very large, the odds are good that you’ll end up identifying some real effect somewhere in the brain, and the estimated effect size within that region will be grossly distorted because of the selection process.
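The thought experiment described earlier (a trivial effect of d = 0.125 everywhere, tested at p < .001 with a small sample) is also easy to simulate. The sketch below is a toy, not the analysis behind the figure: it assumes 10,000 independent ‘voxels’ (real voxels are spatially correlated), unit-variance Gaussian noise, n = 20, and a tabled critical t value, and it only counts voxels that pass threshold in the direction of the true effect.

```python
import math
import random

random.seed(0)  # fixed seed so the sketch is reproducible

TRUE_D = 0.125       # every "voxel" has the same trivial true effect
N_SUBJECTS = 20
N_VOXELS = 10_000    # assumption: voxels are independent, unlike a real brain
T_CRIT = 3.883       # two-sided p < .001 for t with 19 df (standard table value)


def observed_effect(n, true_d):
    """Draw n unit-variance observations; return (observed d, t statistic)."""
    sample = [random.gauss(true_d, 1.0) for _ in range(n)]
    mean = sum(sample) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in sample) / (n - 1))
    d_obs = mean / sd
    return d_obs, d_obs * math.sqrt(n)


results = [observed_effect(N_SUBJECTS, TRUE_D) for _ in range(N_VOXELS)]
# Keep only voxels that pass threshold in the direction of the true effect.
significant_d = [d for d, t in results if t > T_CRIT]

print(f"voxels passing threshold: {len(significant_d)} of {N_VOXELS}")
if significant_d:
    mean_sig = sum(significant_d) / len(significant_d)
    print(f"true d = {TRUE_D}, mean observed d among survivors = {mean_sig:.2f}")
```

Because t = d × √n for a one-sample test, any voxel that passes this threshold with n = 20 must show an observed d above 3.883 / √20 ≈ 0.87, no matter how small the true effect is. The selection step guarantees the inflation.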

Encouraging people to use small samples is a sure way to ensure that researchers continue to publish highly biased findings that lead other researchers down garden paths trying unsuccessfully to replicate ‘huge’ effects. It may make for an interesting, more publishable story (who wouldn’t rather talk about the single cluster that supports human intelligence than about the complex, highly distributed pattern of relatively weak effects?), but it’s bad science. It’s exactly the same problem geneticists confronted ten or fifteen years ago when the first candidate gene and genome-wide association studies (GWAS) seemed to reveal remarkably strong effects of single genetic variants that subsequently failed to replicate. And it’s the same reason geneticists now run association studies with 10,000+ subjects and not 300.

Unfortunately, the costs of fMRI scanning haven’t come down the same way the costs of genotyping have, so there’s tremendous resistance at present to the idea that we really do need to routinely acquire much larger samples if we want to get a clear picture of how big effects really are. Be that as it may, we shouldn’t indulge in wishful thinking just because of logistical constraints. The fact that it’s difficult to get good estimates doesn’t mean we should pretend our bad estimates are actually good ones.

What’s right with the argument

Having criticized much of Friston’s commentary, I should note that there’s one part I like a lot, and that’s the section on protected inference in Appendix I. The point Friston makes here is that you can still use a standard hypothesis testing approach fruitfully—i.e., without falling prey to the problem of classical inference—so long as you explicitly protect against the possibility of identifying trivial effects. Friston’s treatment is mathematical, but all he’s really saying here is that it makes sense to use non-zero ranges instead of true null hypotheses. I’ve advocated the same approach before (e.g., here), as I’m sure many other people have. The point is simple: if you think an effect of, say, 1/8th of a standard deviation is too small to care about, then you should define a ‘pseudonull’ hypothesis of d = -.125 to .125 instead of a null of exactly zero.

Once you do that, any time you reject the null, you’re now entitled to conclude with reasonable certainty that your effects are in fact non-trivial in size. So I completely agree with Friston when he observes in the conclusion to Appendix I that:

…the adage ‘you can never have enough data’ is also true, provided one takes care to protect against inference on trivial effect sizes – for example using protected inference as described above.

Of course, the reason I agree with it is precisely because it directly contradicts Friston’s dominant recommendation to use small samples. In fact, since rejecting non-zero values is more difficult than rejecting a null of zero, when you actually perform power calculations based on protected inference, it becomes immediately apparent just how inadequate samples on the order of 16 – 32 subjects will be most of the time (e.g., rejecting a null of zero when detecting an effect of d = 0.5 with 80% power using a one-sample t-test at p < .05 requires 33 subjects, but if you want to reject a ‘trivial’ effect size of |d| <= .125, that n is now upwards of 50).
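The comparison in that parenthetical can be roughed out as follows. This sketch assumes a normal approximation to a two-sided one-sample test, so it comes out slightly below the exact t-based figure for the zero null, and it treats protected inference simply as shifting the null from zero to the edge of the trivial range. The function name and the `null_bound` parameter are made up for illustration.

```python
import math
from statistics import NormalDist


def required_n(true_d, null_bound=0.0, alpha=0.05, power=0.80):
    """Approximate n for a one-sample test to reject effects up to null_bound.

    Normal approximation to a two-sided test at level alpha. null_bound = 0
    is the ordinary point null; null_bound > 0 is a 'pseudonull' range.
    """
    normal = NormalDist()
    z_needed = normal.inv_cdf(1 - alpha / 2) + normal.inv_cdf(power)
    # What matters is the distance between the true effect and the null edge.
    return math.ceil((z_needed / (true_d - null_bound)) ** 2)


print("point null of zero:     n =", required_n(0.5))
print("pseudonull |d| <= .125: n =", required_n(0.5, null_bound=0.125))
```

The required n scales with the inverse square of the gap between the true effect and the edge of the null range, which is why even a narrow pseudonull raises the sample size so sharply.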

Reprising the rules

With the above considerations in mind, we can now turn back to Friston’s rules 4, 5, and 8, and see why his admonitions to reviewers are uncharitable at best and insensible at worst. First, Rule 4 (the under-sampled study). Here’s the kind of comment Friston (ironically) argues reviewers should avoid:

Reviewer: Unfortunately, this paper cannot be accepted due to the small number of subjects. The significant results reported by the authors are unsafe because the small sample size renders their design insufficiently powered. It may be appropriate to reconsider this work if the authors recruit more subjects.

Perhaps many reviewers make exactly this argument; I haven’t been an editor, so I don’t know (though I can say that I’ve read many reviews of papers I’ve co-reviewed and have never actually seen this particular variant). But even if we give Friston the benefit of the doubt and accept that one shouldn’t question the validity of a finding on the basis of small samples (i.e., we accept that p values mean the same thing in large and small samples), that doesn’t mean the more general critique from low power is itself a bad one. To the contrary, a much better form of the same criticism (and one that I’ve raised frequently in my own reviews) is the following:

Reviewer: the authors draw some very strong conclusions in their Discussion about the implications of their main finding. But their finding issues from a sample of only 16 subjects, and the confidence interval around the effect is consequently very large, and nearly includes zero. In other words, the authors’ findings are entirely consistent with the effect they report actually being very small, quite possibly too small to care about. The authors should either weaken their assertions considerably, or provide additional evidence for the importance of the effect.

Or another closely related one, which I’ve also raised frequently:

Reviewer: the authors tout their results as evidence that region R is ‘selectively’ activated by task T. However, this claim is based entirely on the fact that region R was the only part of the brain to survive correction for multiple comparisons. Given that the sample size in question is very small, and power to detect all but the very largest effects is consequently very low, the authors are in no position to conclude that the absence of significant effects elsewhere in the brain suggests selectivity in region R. With this small a sample, the authors’ data are entirely consistent with the possibility that many other brain regions are just as strongly activated by task T, but failed to attain significance due to sampling error. The authors should either avoid making any claim that the activity they observed is selective, or provide direct statistical support for their assertion of selectivity.

Neither of these criticisms can be defused by suggesting that effect sizes from smaller samples are likely to be larger than effect sizes from large studies. And it would be disastrous for the field of neuroimaging if Friston’s commentary succeeded in convincing reviewers to stop criticizing studies on the basis of low power. If anything, we collectively need to focus far greater attention on issues surrounding statistical power.

Next, Rule 5 (the over-sampled study):

Reviewer: I would like to commend the authors for studying such a large number of subjects; however, I suspect they have not heard of the fallacy of classical inference. Put simply, when a study is overpowered (with too many subjects), even the smallest treatment effect will appear significant. In this case, although I am sure the population effects reported by the authors are significant; they are probably trivial in quantitative terms. It would have been much more compelling had the authors been able to show a significant effect without resorting to large sample sizes. However, this was not the case and I cannot recommend publication.

I’ve already addressed this above; the problem with this line of reasoning is that nothing says you have to care equally about every statistically significant effect you detect. If you ever run into a reviewer who insists that your sample is overpowered and has consequently produced too many statistically significant effects, you can simply respond like this:

Response: we appreciate the reviewer’s concern that our sample is potentially overpowered. However, this strikes us as a limitation of classical inference rather than a problem with our study. To the contrary, the benefit of having a large sample is that we are able to focus on effect sizes rather than on rejecting a null hypothesis that we would argue is meaningless to begin with. To this end, we now display a second, more conservative, brain activation map alongside our original one that raises the statistical threshold to the point where the confidence intervals around all surviving voxels exclude effects smaller than d = .125. The reviewer can now rest assured that our results protect against trivial effects. We would also note that this stronger inference would not have been possible if our study had had a much smaller sample.

There is rarely if ever a good reason to criticize authors for having a large sample after it’s already collected. You can always raise the statistical threshold to protect against trivial effects if you need to; what you can’t easily do is magic more data into existence in order to shrink your confidence intervals.

Lastly, Rule 8 (exploiting ‘superstitious’ thinking about effect sizes):

Reviewer: It appears that the authors are unaware of the dangers of voodoo correlations and double dipping. For example, they report effect sizes based upon data (regions of interest) previously identified as significant in their whole brain analysis. This is not valid and represents a pernicious form of double dipping (biased sampling or non-independence problem). I would urge the authors to read Vul et al. (2009) and Kriegeskorte et al. (2009) and present unbiased estimates of their effect size using independent data or some form of cross validation.

Friston’s recommended response is to point out that concerns about double-dipping are misplaced, because the authors are typically not making any claims that the reported effect size is an accurate representation of the population value, but only following standard best-practice guidelines to include effect size measures alongside p values. This would be a fair recommendation if it were true that reviewers frequently object to the mere act of reporting effect sizes based on the specter of double-dipping; but I simply don’t think this is an accurate characterization. In my experience, the impetus for bringing up double-dipping is almost always one of two things: (a) authors getting overly excited about the magnitude of the effects they have obtained, or (b) authors conducting non-independent tests and treating them as though they were independent (e.g., when identifying an ROI based on a comparison of conditions A and B, and then reporting a comparison of A and C without considering the bias inherent in this second test). Both of these concerns are valid and important, and it’s a very good thing that reviewers bring them up.
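
Concern (a) is easy to demonstrate with a toy simulation. The sketch below is only an illustration with made-up parameters (a true effect of d = 0.3 in every "voxel", n = 16, 5,000 voxels), not an analysis of any real dataset: it selects voxels that pass a one-sample t-test and then reports the mean effect size in the same data used for selection.

```python
import math
import random


def selected_vs_true(true_d=0.3, n=16, n_voxels=5000, t_crit=2.131, seed=0):
    """Mean observed d among 'significant' voxels when every voxel has the same true d.

    t_crit=2.131 is the two-sided p < .05 cutoff for a one-sample t-test with
    15 degrees of freedom (n = 16); it must be changed if n changes.
    Only positive effects are selected here, for simplicity.
    """
    rng = random.Random(seed)
    selected = []
    for _ in range(n_voxels):
        sample = [rng.gauss(true_d, 1.0) for _ in range(n)]
        mean = sum(sample) / n
        sd = math.sqrt(sum((x - mean) ** 2 for x in sample) / (n - 1))
        d_obs = mean / sd
        if d_obs * math.sqrt(n) > t_crit:  # t statistic exceeds the cutoff
            selected.append(d_obs)
    return sum(selected) / len(selected), len(selected) / n_voxels


mean_selected_d, fraction_selected = selected_vs_true()
print(f"true d = 0.30, mean d in selected voxels = {mean_selected_d:.2f}")
print(f"fraction of voxels selected = {fraction_selected:.2f}")
```

Because a voxel can only pass the threshold if its observed d exceeds t_crit / sqrt(n) (about 0.53 here), the average effect in the selected voxels is guaranteed to overstate the true value of 0.3. That overstatement is the bias reviewers have in mind when they raise double-dipping.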

The right way to determine sample size

If we can’t rely on blanket recommendations to guide our choice of sample size, then what? Simple: perform a power calculation. There’s no mystery to this; both brief and extended treatises on statistical power are all over the place, and power calculators for most standard statistical tests are available online as well as in most off-line statistical packages (e.g., I use the pwr package for R). For more complicated statistical tests for which analytical solutions aren’t readily available (e.g., fancy interactions involving multiple within- and between-subject variables), you can get reasonably good power estimates through simulation.
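
As a rough illustration of the simulation route, here is a stdlib-only Python sketch for the simplest case, a two-sided one-sample t-test. It is a stand-in for illustration, not a substitute for a proper power package: it assumes normally distributed data with unit variance, and the helper names (`t_cdf`, `t_critical`, `simulated_power`) are invented for this example.

```python
import math
import random


def t_cdf(t, df, steps=2000):
    """CDF of Student's t, via Simpson's rule on the density (steps must be even)."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def pdf(x):
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    h = abs(t) / steps
    area = pdf(0.0) + pdf(abs(t))
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * pdf(i * h)
    area *= h / 3
    return 0.5 + math.copysign(area, t)


def t_critical(alpha, df):
    """Two-sided critical value, found by bisection on t_cdf."""
    lo, hi = 0.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < 1 - alpha / 2:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def simulated_power(n, d, alpha=0.05, n_sims=5000, seed=1):
    """Fraction of simulated studies in which the one-sample t-test rejects."""
    rng = random.Random(seed)
    crit = t_critical(alpha, n - 1)
    hits = 0
    for _ in range(n_sims):
        sample = [rng.gauss(d, 1.0) for _ in range(n)]  # true effect = d
        mean = sum(sample) / n
        sd = math.sqrt(sum((x - mean) ** 2 for x in sample) / (n - 1))
        t_stat = mean / (sd / math.sqrt(n))
        hits += abs(t_stat) > crit
    return hits / n_sims


print(simulated_power(n=16, d=0.5))
```

With n = 16 and d = 0.5 the estimate should land near the 46% figure quoted in the appendix, give or take simulation noise. For more complicated designs, the same structure applies: replace the data-generating line and the test statistic with whatever your actual analysis does.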

Of course, there’s no guarantee you’ll like the answers you get. Actually, in most cases, if you’re honest about the numbers you plug in, you probably won’t like the answer you get. But that’s life; nature doesn’t care about making things convenient for us. If it turns out that it takes 80 subjects to have adequate power to detect the effects we care about and expect, we can (a) suck it up and go for n = 80, (b) decide not to run the study, or (c) accept that logistical constraints mean our study will have less power than we’d like (which implies that any results we obtain will offer only a fractional view of what’s really going on). What we don’t get to do is look the other way and pretend that it’s just fine to go with 16 subjects simply because the last time we did that, we got this amazingly strong, highly selective activation that successfully made it into a good journal. That’s the same logic that repeatedly produced unreplicable candidate gene findings in the 1990s, and, if it continues to go unchecked in fMRI research, risks turning the field into a laughing stock among other scientific disciplines.

Conclusion

The point of all this is not to convince you that it’s impossible to do good fMRI research with just 16 subjects, or that reviewers don’t sometimes say silly things. There are many questions that can be answered with 16 or even fewer subjects, and reviewers most certainly do say silly things (I sometimes cringe when re-reading my own older reviews). The point is that blanket pronouncements, particularly when made ironically and with minimal qualification, are not helpful in advancing the field, and can be very damaging. It simply isn’t true that there’s some magic sample size range like 16 to 32 that researchers can bank on reflexively. If there’s any generalization that we can allow ourselves, it’s probably that, under reasonable assumptions, Friston’s recommended sample sizes are much too small. Typical effect sizes and analysis procedures will generally require much larger samples than neuroimaging researchers are used to collecting. But again, there’s no substitute for careful case-by-case consideration.

In the natural course of things, there will be cases where n = 4 is enough to detect an effect, and others where the effort is questionable even with 100 subjects; unfortunately, we won’t know which situation we’re in unless we take the time to think carefully and dispassionately about what we’re doing. It would be nice to believe otherwise; certainly, it would make life easier for the neuroimaging community in the short term. But since the point of doing science is to discover what’s true about the world, and not to publish an endless series of findings that sound exciting but don’t replicate, I think we have an obligation to both ourselves and to the taxpayers that fund our research to take the exercise more seriously.

Appendix I: Evaluating Friston’s loss-function analysis

In this appendix I review a number of weaknesses in Friston’s loss-function analysis, and show that under realistic assumptions, the recommendation to use sample sizes of 16 to 32 subjects is far too optimistic.

First, the numbers don’t seem to be right. I say this with a good deal of hesitation, because I have very poor mathematical skills, and I’m sure Friston is much smarter than I am. That said, I’ve tried several different power packages in R and finally resorted to empirically estimating power with simulated draws, and all approaches converge on numbers quite different from Friston’s. Even the sensitivity plots seem off by a good deal (for instance, Friston’s Figure 3 suggests around 30% sensitivity with n = 80 and d = 0.125, whereas all the sources I’ve consulted produce a value around 20%). In my analysis, the loss function is minimized at n = 22 rather than n = 16. I suspect the problem is with Friston’s approximation, but I’m open to the possibility that I’ve done something very wrong, and confirmations or disconfirmations are welcome in the comments below. In what follows, I’ll report the numbers I get rather than Friston’s (mine are somewhat more pessimistic, but the overarching point doesn’t change either way).
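
For readers who want a quick sanity check on the sensitivity figure without installing anything, here is a minimal sketch using a normal (z) approximation to the two-sided one-sample test. This is not Friston's method and not what the R power packages compute (they use the noncentral t distribution); the approximation slightly overstates power at small n, but at n = 80 the difference is small. The function name is illustrative.

```python
from statistics import NormalDist


def approx_power_one_sample(n, d, alpha=0.05):
    """Two-sided power of a one-sample test, using a z (normal) approximation."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = d * n ** 0.5  # expected value of the test statistic under the effect
    # Probability of exceeding the cutoff in either tail
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


print(round(approx_power_one_sample(n=80, d=0.125), 2))  # -> 0.2
```

This agrees with the roughly 20% figure I get from the power packages and from simulation.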

Second, there’s the statistical threshold. Friston’s analysis assumes that all of our tests are conducted without correction for multiple comparisons (i.e., at p < .05), but this clearly doesn’t apply to the vast majority of neuroimaging studies, which are either conducting massive univariate (whole-brain) analyses, or testing at least a few different ROIs or networks. As soon as you make the threshold more stringent, the optimal sample size returned by the loss-function analysis increases dramatically. If the threshold is a still-relatively-liberal (for whole-brain analysis) p < .001, the loss function is now minimized at 48 subjects: hardly a welcome conclusion, and a far cry from 16 subjects. Since this is probably still the modal fMRI threshold, one could argue Friston should have been trumpeting a sample size of 48 all along, which is not exactly a ‘small’ sample size given the associated costs.

Third, the n = 16 (or 22) figure only holds for the simplest of within-subject tests (e.g., a one-sample t-test)–again, a best-case scenario (though certainly a common one). It doesn’t apply to many other kinds of tests that are the primary focus of a huge proportion of neuroimaging studies–for instance, two-sample t-tests, or interactions between multiple within-subject factors. In fact, if you apply the same analysis to a two-sample t-test (or equivalently, a correlation test), the optimal sample size turns out to be 82 (41 per group) at a threshold of p < .05, and a whopping 174 (87 per group) at a threshold of p < .001. In other words, if we were to follow Friston’s own guidelines, the typical fMRI researcher who aims to conduct a (liberal) whole-brain individual differences analysis should be collecting 174 subjects a pop. For other kinds of tests (e.g., 3-way interactions), even larger samples might be required.
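
The reason between-subject designs are so much hungrier can be seen in a few lines. The sketch below again uses a normal approximation (an assumption made for simplicity, not the loss-function analysis itself) and compares a one-sample test with a two-sample test on two equal groups, holding the total number of subjects fixed. The function names are illustrative.

```python
from statistics import NormalDist


def approx_power(noncentrality, alpha=0.05):
    """Two-sided power given the expected value of a z-like test statistic."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    return z.cdf(noncentrality - z_crit) + z.cdf(-noncentrality - z_crit)


def one_sample_power(n_total, d, alpha=0.05):
    # Expected statistic: d * sqrt(N)
    return approx_power(d * n_total ** 0.5, alpha)


def two_sample_power(n_total, d, alpha=0.05):
    # Two equal groups of N / 2 each: expected statistic is d * sqrt(N) / 2
    return approx_power(d * n_total ** 0.5 / 2, alpha)


for n_total in (22, 88):
    print(n_total,
          round(one_sample_power(n_total, 0.5), 2),
          round(two_sample_power(n_total, 0.5), 2))
```

Under this approximation, a two-sample test needs four times the total sample to match the power of a one-sample test for the same standardized effect. That is in the same ballpark as the jumps reported above (22 to 82, and 48 to 174).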

Fourth, the claim that only large effects–i.e., those that can be readily detected with a sample size of 16–are worth worrying about is likely to annoy and perhaps offend any number of researchers who have perfectly good reasons for caring about effects much smaller than half a standard deviation. A cursory look at most literatures suggests that effects of 1 sd are not the norm; they’re actually highly unusual in mature fields. For perspective, the standardized difference in height between genders is about 1.5 sd; the validity of job interviews for predicting success is about .4 sd; and the effect of gender on risk-taking (men take more risks) is about .2 sd—what Friston would call a very small effect (for other examples, see Meyer et al., 2001). Against this backdrop, suggesting that only effects greater than 1 sd (about the strength of the association between height and weight in adults) are of interest would seem to preclude many, and perhaps most, questions that researchers currently use fMRI to address. Imaging genetics studies are immediately out of the picture; so too, in all likelihood, are cognitive training studies, most investigations of individual differences, and pretty much any experimental contrast that claims to very carefully isolate a relatively subtle cognitive difference. Put simply, if the field were to take Friston’s analysis seriously, the majority of its practitioners would have to pack up their bags and go home. Entire domains of inquiry would shutter overnight.

To be fair, Friston briefly considers the possibility that small effect sizes could be important. But he doesn’t seem to take it very seriously:

Can true but trivial effect sizes can ever be interesting? It could be that a very small effect size may have important implications for understanding the mechanisms behind a treatment effect – and that one should maximise sensitivity by using large numbers of subjects. The argument against this is that reporting a significant but trivial effect size is equivalent to saying that one can be fairly confident the treatment effect exists but its contribution to the outcome measure is trivial in relation to other unknown effects…

The problem with the latter argument is that the real world is a complicated place, and most interesting phenomena have many causes. A priori, it is reasonable to expect that the vast majority of effects will be small. We probably shouldn’t expect any single genetic variant to account for more than a small fraction of the variation in brain activity, but that doesn’t mean we should give up entirely on imaging genetics. And of course, it’s worth remembering that, in the context of fMRI studies, when Friston talks about ‘very small effect sizes,’ that’s a bit misleading; even medium-sized effects that Friston presumably allows are interesting could be almost impossible to detect at the sample sizes he recommends. For example, a one-sample t-test with n = 16 subjects detects an effect of d = 0.5 only 46% and 5% of the time at p < .05 and p < .001, respectively. Applying Friston’s own loss function analysis to detection of d = 0.5 returns an optimal sample size of n = 63 at p < .05 and n = 139 at p < .001, a message not entirely consistent with the recommendations elsewhere in his commentary.

Friston, K. (2012). Ten ironic rules for non-statistical reviewers. NeuroImage. DOI: 10.1016/j.neuroimage.2012.04.018